A Prelude to Intents

Blockchain’s Third Wave is Upon Us

Where we are with blockchains reminds me of a conversation involving Marc Andreessen and Jim Barksdale. Back when the two were taking Netscape public, they were asked: “How do you know Microsoft won’t bundle a browser into its product?” In somewhat iconic fashion, Barksdale answered:

Gentlemen, there’s only two ways I know of to make money: bundling and unbundling.

Looking back on that conversation, Barksdale has joked that he only said it to end the conversation. But the line brings us to where we are in crypto today.

At its core, crypto represents the unbundling of trust. Under the hood, we are seeing the unbundling of blockchains themselves. In the modular vs. monolithic wars, a clear winner (modular) is emerging. In technology, the transition from integrated to modular has been a common theme, best captured by the late, great Clayton Christensen in his Innovator’s books (The Innovator’s Dilemma, The Innovator’s Solution). Ben Thompson’s Stratechery covered unbundling in 2017 and bundling in 2020.

This cycle of bundling and unbundling describes the first two and a half iterations of blockchains pretty well. In this edition, I dive into the transformation of blockchains, from BTC to where we are today. This should serve as a good refresher on how blockchains have evolved from monolithic to modular.

Intent-centric architecture is, in many ways, the first architecture designed around modular, interoperable blockchains. I want this edition of Abstract(ed) to be a prelude to more targeted writing on intent-centric architectures and forecasts. Before we dive into what is being called the third wave of blockchains, let us quickly run through the first two (and a half).

P.S. I won’t do a deep dive on intents or intent-centric architecture in this piece; at the end of the newsletter, I will highlight their core tenets.

Evolution of Blockchains

v1.0: Bitcoin i.e. Scriptable Settlement

(The first “bundle” to unbundle Trust)

What was the Original Promise?

In recent times, we seem to have bastardised the original intention behind BTC. Taken verbatim from the whitepaper’s title, the goal of Bitcoin was to create a “Peer-to-Peer Electronic Cash System”.

We should use these quiet times to get back to the roots of cryptocurrency.

It's not about building a casino of tokens that traders can speculate on to get rich quick.

It's about building censorship-resistance money, private transactions, and unstoppable community computers.

(Mustafa Al-Bassam, @musalbas, Sep 9, 2023)

What was the Original Premise?

In 2008, when the whitepaper came out, the global financial system had just started to collapse. Centralised institutions fuelled one of the most brutal recessions in modern times, which led to economic intervention from the Fed. If you want a full breakdown of that intervention and its implications, this is a good starting point.

TL;DR: play around with the money supply indefinitely to delay solving the underlying problems.

Bitcoin was built to give society a money system devoid of centralised trust. It gave us an economic fabric that is apolitical, transparent and immutable. The core tenets of BTC are:

Decentralization: Instead of relying on a central authority to maintain a record of transactions, Bitcoin would use a distributed network of nodes (computers) to validate and record transactions.

Cryptography: The whitepaper emphasized the use of cryptographic techniques to secure transactions and control the creation of new units of the digital currency. Public/private key pairs (digital signatures) secure the ownership and transfer of Bitcoin.

Proof of Work: Miners would repeatedly hash candidate blocks, searching for one whose hash falls below a difficulty target, in order to validate transactions and add them to the blockchain. This process required significant computational power and energy, making it economically costly for malicious actors to attack the network.

Limited Supply: Bitcoin was designed with a capped supply of 21 million coins, which would be gradually released through the mining process. This scarcity was intended to mimic the scarcity of precious metals like gold and create a deflationary monetary system.

Peer-to-Peer Transactions: Bitcoin was meant to enable direct peer-to-peer transactions between users without the need for intermediaries. This would reduce transaction costs and increase financial inclusivity.
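To make the Proof of Work and Limited Supply tenets concrete, here is a toy Python sketch. The mining loop follows Bitcoin’s idea of double SHA-256 over a block header plus a nonce, but the header bytes, the 8-byte nonce encoding and the “leading zero bits” difficulty are my own simplifications, not Bitcoin’s real header format or target encoding. The supply function sums the block subsidy (50 BTC, halving every 210,000 blocks, counted in whole satoshis) to show why the cap lands just under 21 million.

```python
import hashlib

def pow_hash(header: bytes, nonce: int) -> int:
    """Double SHA-256 of header || nonce, read as a 256-bit integer (toy encoding)."""
    data = header + nonce.to_bytes(8, "little")
    return int.from_bytes(hashlib.sha256(hashlib.sha256(data).digest()).digest(), "big")

def mine(header: bytes, difficulty_bits: int) -> int:
    """Brute-force the first nonce whose hash has at least `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while pow_hash(header, nonce) >= target:  # expected ~2**difficulty_bits attempts
        nonce += 1
    return nonce

def verify(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Checking a solution costs a single hash, however long mining took."""
    return pow_hash(header, nonce) < (1 << (256 - difficulty_bits))

def total_supply_sats(initial_subsidy: int = 50 * 100_000_000,
                      halving_interval: int = 210_000) -> int:
    """Sum every block subsidy until integer halving reaches zero."""
    total, subsidy = 0, initial_subsidy
    while subsidy > 0:
        total += subsidy * halving_interval
        subsidy >>= 1  # halve, rounding down to whole satoshis
    return total

header = b"toy block header"
nonce = mine(header, difficulty_bits=16)
print(nonce, verify(header, nonce, 16))
print(total_supply_sats())  # 2099999997690000 sats, i.e. just under 21 million BTC
```

The asymmetry is the point: finding the nonce takes many hashes, while any node can check it with one.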

Overall, the Bitcoin whitepaper outlined a vision for a digital currency that would be open, borderless, censorship-resistant, and resistant to inflation. It aimed to provide a new way for people to engage in financial transactions and store value outside of traditional banking systems.

What were the limitations?

Bitcoin paved the way for scriptable settlement. Because Bitcoin’s goal was to be a P2P money system, its scripting language (Script) was designed for exactly that, which made it challenging to bring general-purpose programmability to Bitcoin. Script is deliberately not Turing complete: it has no loops, so every script runs in a bounded number of steps.
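To show what “not Turing complete” means in practice, here is a toy Python evaluator for a handful of real Script opcode names (OP_DUP, OP_ADD, OP_EQUAL, OP_VERIFY) with simplified integer semantics. Real Script also has conditionals (OP_IF) and signature checks, but, like this sketch, no backward jumps, so it cannot loop. The “puzzle” script is hypothetical.

```python
def run_script(script: list) -> bool:
    """Evaluate a toy subset of Bitcoin Script on a stack of integers.

    Execution is one forward pass over the opcodes, so it always halts
    after at most len(script) steps: there is no way to express a loop.
    Popping an empty stack raises IndexError, i.e. the script fails.
    """
    stack: list[int] = []
    for op in script:
        if isinstance(op, int):  # data push
            stack.append(op)
        elif op == "OP_DUP":
            stack.append(stack[-1])
        elif op == "OP_ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "OP_EQUAL":
            b, a = stack.pop(), stack.pop()
            stack.append(1 if a == b else 0)
        elif op == "OP_VERIFY":
            if stack.pop() == 0:  # fail immediately on a false value
                return False
        else:
            raise ValueError(f"unsupported opcode: {op}")
    # As in Bitcoin, the script succeeds only if the top of the stack is true (non-zero)
    return bool(stack) and stack[-1] != 0

# A hypothetical "puzzle": coins locked to whoever supplies two numbers summing to 5.
# Conceptually, the spender's unlocking data runs first, then the locking script.
locking = ["OP_ADD", 5, "OP_EQUAL"]
print(run_script([2, 3] + locking))  # True
print(run_script([2, 2] + locking))  # False
```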

People tried to use BTC to represent real-world value, for example through “Coloured Coins” or a “BTC stock exchange”. While these apps worked, they were extremely clunky! The idea of building programmable applications that could inherit the same values as BTC is what ushered in the second wave, pioneered by Ethereum.

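(FYI, a new paper called BitVM just dropped. It makes it possible to express Turing-complete computation on Bitcoin without a soft fork, by verifying computation off chain and only touching Bitcoin’s main chain to prove fraud in a dispute. This is an exciting development that everyone should track!)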

v2.0: Programmable Settlement i.e. Ethereum

(BUNDLING Programmability to Trustless Networks)

Vitalik Buterin, along with Yoni Assia and Meni Rosenfeld, co-wrote a paper on encoding metadata into Bitcoin via Coloured Coins. The premise was: we now have a ledger and a store of value, so what information can be encoded into bitcoins to leverage Bitcoin’s immutability? That paper became the catalyst for what we now know as Ethereum.

The primary premise for the creation of Ethereum was to address Bitcoin’s limitations and expand the capabilities of blockchain technology beyond what Bitcoin offered. The whitepaper articulated several key reasons for Ethereum's creation:

Lack of Programmability in Bitcoin: The whitepaper emphasized that Bitcoin's scripting language, while serving its intended purpose as a digital currency, was limited and not expressive enough to support complex applications beyond simple transactions. Ethereum aimed to introduce a more flexible and expressive programming language to enable a wide range of decentralized applications.

Smart Contracts: Ethereum introduced the concept of "smart contracts," which are self-executing agreements with the terms of the contract written in code. These smart contracts could automate a wide array of processes and interactions, from financial transactions to governance, without the need for intermediaries.

Decentralized Applications (DApps): Ethereum aimed to provide a platform for building decentralized applications (DApps). These DApps could leverage smart contracts to create decentralized services, games, marketplaces, and more, opening up new possibilities for innovation and user empowerment.

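Turing Completeness: Ethereum's execution environment, the Ethereum Virtual Machine (EVM), was designed to be Turing-complete, meaning it can handle any computation that can be described algorithmically, with gas fees bounding how much computation a single transaction can consume. High-level languages such as Solidity, which came after the whitepaper, compile down to EVM bytecode. This gave developers the flexibility to build highly complex and versatile applications on the Ethereum platform.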

Token Creation: The Ethereum whitepaper also highlighted token systems as one of the simplest applications to build on Ethereum. Custom tokens were later standardised as ERC-20 (proposed in 2015), enabling projects to issue their own tokens for various purposes, including crowdfunding and decentralized governance.

Blockchain as a Global Computer: Ethereum envisioned a world computer where anyone could run decentralized applications without relying on a central authority. This concept aimed to democratize access to computing resources and reduce the power of centralized intermediaries.
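Turing completeness is only safe on a shared blockchain because of gas. The toy interpreter below is my own sketch, not the EVM: it supports a backward JUMP, so a program can loop forever, and a per-opcode gas charge (using the EVM’s costs for these opcode types) guarantees execution still halts.

```python
class OutOfGas(Exception):
    """Execution exhausted its gas budget; on Ethereum, its state changes would be reverted."""

# Per-opcode gas costs, matching the EVM's charges for PUSH/ADD/DUP/JUMP/STOP
GAS_COST = {"PUSH": 3, "ADD": 3, "DUP": 3, "JUMP": 8, "STOP": 0}

def execute(program: list[tuple], gas_limit: int) -> tuple[list[int], int]:
    """Run a toy stack program; return (final stack, gas used)."""
    stack: list[int] = []
    pc, gas_left = 0, gas_limit
    while pc < len(program):
        op, *args = program[pc]
        cost = GAS_COST[op]
        if cost > gas_left:
            raise OutOfGas(f"out of gas at pc={pc}")
        gas_left -= cost
        if op == "PUSH":
            stack.append(args[0])
        elif op == "ADD":
            stack.append(stack.pop() + stack.pop())
        elif op == "DUP":
            stack.append(stack[-1])
        elif op == "JUMP":  # simplified: the real EVM's JUMP pops its target from the stack
            pc = args[0]
            continue
        elif op == "STOP":
            break
        pc += 1
    return stack, gas_limit - gas_left

# Terminates on its own: 2 + 3
print(execute([("PUSH", 2), ("PUSH", 3), ("ADD",), ("STOP",)], gas_limit=100))  # ([5], 9)

# Counts up forever; only the gas limit stops it
counter = [("PUSH", 0), ("PUSH", 1), ("ADD",), ("JUMP", 1)]
try:
    execute(counter, gas_limit=100)
except OutOfGas as err:
    print("halted:", err)
```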

So clearly, Ethereum homed in on providing programmability, on top of the economic security and decentralisation that blockchains (Bitcoin) provided. This makes a lot of sense, given Vitalik’s exploration of encoding metadata into BTC. The primitive expanded well beyond what Bitcoin could offer (from the standpoint of programming complex outcomes) to give us a suite of composable applications including, but not limited to:

Decentralised Finance: Compound, Aave, MakerDAO, Uniswap etc

Games & NFTs: CryptoKitties, CryptoPunks

Oracles: Chainlink

Marketplaces: OpenSea, Golem and more!
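Much of this composability rests on token contracts. Below is a minimal in-memory Python model of an ERC-20-style token: a balance table plus the approve / transferFrom allowance pattern that lets one contract (say, a DEX) move a user’s tokens on their behalf. It mirrors the standard’s core functions in snake_case, but it is not Solidity and skips events, decimals and other details of the spec; account names are hypothetical.

```python
class Token:
    """Toy ERC-20-style ledger (illustration only, not the standard's full semantics)."""

    def __init__(self, supply: int, owner: str):
        self.balances: dict[str, int] = {owner: supply}
        self.allowances: dict[tuple[str, str], int] = {}  # (owner, spender) -> amount

    def balance_of(self, account: str) -> int:
        return self.balances.get(account, 0)

    def transfer(self, sender: str, to: str, amount: int) -> None:
        if amount < 0 or self.balance_of(sender) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] = self.balance_of(sender) - amount
        self.balances[to] = self.balance_of(to) + amount

    def approve(self, owner: str, spender: str, amount: int) -> None:
        # Owner lets `spender` move up to `amount` of their tokens
        self.allowances[(owner, spender)] = amount

    def transfer_from(self, spender: str, owner: str, to: str, amount: int) -> None:
        allowed = self.allowances.get((owner, spender), 0)
        if allowed < amount:
            raise ValueError("allowance exceeded")
        self.transfer(owner, to, amount)  # raises before the allowance is touched if balance is short
        self.allowances[(owner, spender)] = allowed - amount

usdc = Token(supply=1_000, owner="alice")
usdc.approve("alice", "dex", 300)  # alice authorises a DEX contract
usdc.transfer_from("dex", "alice", "bob", 200)
print(usdc.balance_of("alice"), usdc.balance_of("bob"))  # 800 200
```

Because every token exposes the same small interface, any application can integrate any token without bespoke code, which is what made the DeFi “money legos” above possible.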

Ethereum has delivered, and continues to deliver, on its original promise: expanding on Bitcoin and building so much more. While the journey has been incredible to witness, Ethereum has also had to address many issues, and continues to do so. These pertain to:

Scalability:

Because every node executes every transaction one after another, and each block has a gas limit, the number of transactions per second is capped, making the blockchain slow.

When the network was congested, this resulted in very high gas fees.

As more people build on Ethereum, its state (every balance and every contract’s storage) keeps growing, and every full node must store it, making it increasingly demanding to run a node.
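A back-of-the-envelope sketch of both limits, assuming post-Merge mainnet parameters as of 2023 (a 30 million gas block limit with a 15 million gas target, 12-second slots, and 21,000 gas for a plain ETH transfer). The fee update follows the base-fee rule from EIP-1559: every block fuller than the target pushes the base fee up by as much as 12.5%.

```python
def max_transfers_per_second(block_gas_limit: int = 30_000_000,
                             gas_per_transfer: int = 21_000,
                             block_time_s: int = 12) -> float:
    """Upper bound on TPS if every block were packed with plain ETH transfers."""
    return (block_gas_limit // gas_per_transfer) / block_time_s

def next_base_fee(base_fee: int, gas_used: int, gas_target: int = 15_000_000) -> int:
    """EIP-1559 base-fee update (in wei): move by up to 1/8 per block toward the target."""
    if gas_used == gas_target:
        return base_fee
    if gas_used > gas_target:
        delta = max(base_fee * (gas_used - gas_target) // gas_target // 8, 1)
        return base_fee + delta
    delta = base_fee * (gas_target - gas_used) // gas_target // 8
    return base_fee - delta

# 119.0; most contract interactions use far more gas than a plain transfer
print(max_transfers_per_second())

# Congestion: ten consecutive full blocks, starting from a 10 gwei base fee
fee = 10 * 10**9
for _ in range(10):
    fee = next_base_fee(fee, gas_used=30_000_000)
print(fee / 10**9)  # about 32.5 gwei, more than 3x the starting fee
```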

PoW

As a PoW blockchain, Ethereum’s consensus was at risk of centralisation: computational power requires resources, which concentrates block production in the hands of a few.

The computational cost of PoW consensus also increased the cost of using the network. (Ethereum has since moved to Proof of Stake, with the Merge in September 2022.)

EVM

The Ethereum Virtual Machine was designed specifically with Ethereum in mind, so it inherited Ethereum’s scalability constraints; for example, it executes transactions one at a time.

Similarly, Solidity, Ethereum’s main smart contract language, requires developers to learn a new domain-specific language (DSL) before they can build.

How have these limitations been addressed?

These limitations created an opportunity for new blockchains. Ethereum’s performance and scalability issues opened a window for a host of new, monolithic blockchains that addressed Ethereum’s shortcomings to support even more use cases. These chains can be categorised by whether their virtual machines are compatible with the EVM.

Non-EVM

Solana:

Solana’s goal was to provide the most performant blockchain in terms of throughput, while making assurances around credible neutrality and decentralisation. Despite criticism of being a “VC chain”, Solana has since proven itself on scalability, throughput and performance, whilst remaining sufficiently decentralised.

Much of this performance comes from parallel transaction execution (Sealevel), not sharding: because every Solana transaction declares up front which accounts it will read and write, transactions that touch disjoint accounts can be executed at the same time. Solana runs its own virtual machine, the Solana runtime (often called the SVM).
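To show why declaring accounts up front enables parallelism, here is a simplified Python scheduler. It is my own greedy sketch, not Solana’s actual Sealevel scheduler. Each transaction lists the accounts it reads and writes; two transactions conflict if either writes an account the other touches. Non-conflicting transactions are packed into batches that could run in parallel, and conflicting ones keep their original order. Transaction and account names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tx:
    name: str
    reads: frozenset[str] = frozenset()
    writes: frozenset[str] = frozenset()

def conflicts(a: Tx, b: Tx) -> bool:
    """Conflict if either tx writes an account the other reads or writes (shared reads are fine)."""
    return bool(a.writes & (b.reads | b.writes)) or bool(b.writes & a.reads)

def schedule(txs: list[Tx]) -> list[list[Tx]]:
    """Place each tx in the batch right after the latest earlier tx it conflicts with.

    Txs within a batch never conflict, so each batch could execute in parallel;
    batches execute one after another.
    """
    batches: list[list[Tx]] = []
    placed: list[tuple[Tx, int]] = []  # (tx, batch index)
    for tx in txs:
        idx = 1 + max((i for prev, i in placed if conflicts(tx, prev)), default=-1)
        if idx == len(batches):
            batches.append([])
        batches[idx].append(tx)
        placed.append((tx, idx))
    return batches

txs = [
    Tx("alice_pays_bob", writes=frozenset({"alice", "bob"})),
    Tx("carol_pays_dave", writes=frozenset({"carol", "dave"})),
    Tx("bob_pays_erin", reads=frozenset({"fee_config"}), writes=frozenset({"bob", "erin"})),
    Tx("read_fee_config", reads=frozenset({"fee_config"})),
]
for i, batch in enumerate(schedule(txs)):
    print(i, [tx.name for tx in batch])
# 0 ['alice_pays_bob', 'carol_pays_dave', 'read_fee_config']
# 1 ['bob_pays_erin']
```

An EVM-style runtime, which does not know in advance what a transaction will touch, has to assume every transaction might conflict and run them one at a time.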

EVM

Avalanche

While Solana (and others) opted not to be EVM-compatible, blockchains like Avalanche launched their own networks but chose to retain EVM compatibility.

This means that smart contracts written for Ethereum can be deployed on Avalanche too, with “superior” scalability and throughput.

Ethereum Layer 2 Scaling

New, monolithic blockchains like Solana and Avalanche attempted to solve Ethereum’s scalability challenges by launching their own ecosystems. Ethereum, however, set out to address its own shortcomings with a rollup-centric roadmap: execution moves off mainnet to Layer 2s, while Ethereum L1 is used for settlement and data availability.

Layer 2 (L2) solutions on Ethereum are secondary protocols built on top of Ethereum mainnet, with the primary purpose of improving the network's scalability, speed and cost-effectiveness, chiefly its capacity to process transactions and smart contracts. One of the first Layer 2 designs for Ethereum was the Plasma framework, introduced by Joseph Poon and Vitalik Buterin in 2017.

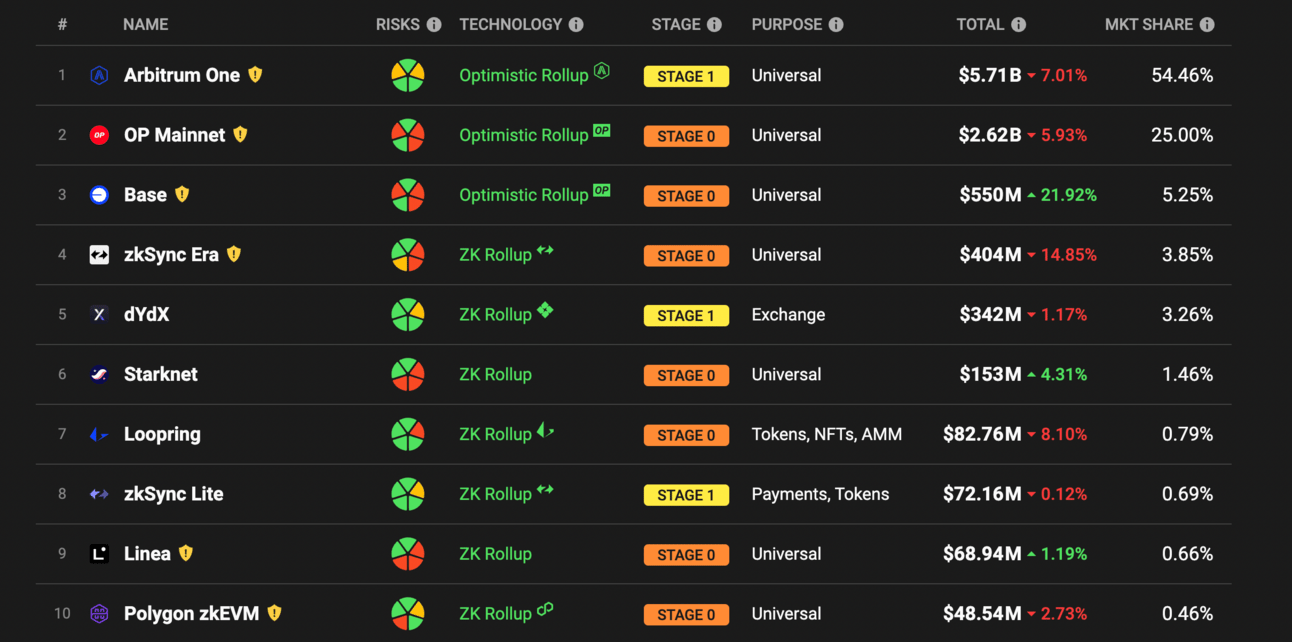

Today, the Ethereum Layer 2 ecosystem is booming. Rollups make different trade-offs (for example, optimistic rollups rely on fraud proofs while zk-rollups post validity proofs), which are best analysed here.
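To make the optimistic side of that trade-off concrete, here is a toy Python model (all names hypothetical). An operator executes a batch off chain and posts the batch data plus a claimed state root to L1; anyone holding the posted data can replay it and flag a mismatch, which is the basis of a fraud proof. The “state root” is just a SHA-256 of the JSON-serialised state standing in for a Merkle root, and there is no dispute game. A zk-rollup would instead attach a validity proof, so no replay is needed.

```python
import hashlib
import json

def apply_tx(state: dict[str, int], tx: dict) -> dict[str, int]:
    """Hypothetical transfer-only state transition; invalid transfers are skipped."""
    new_state = dict(state)
    if tx["amount"] <= 0 or new_state.get(tx["from"], 0) < tx["amount"]:
        return new_state
    new_state[tx["from"]] -= tx["amount"]
    new_state[tx["to"]] = new_state.get(tx["to"], 0) + tx["amount"]
    return new_state

def state_root(state: dict[str, int]) -> str:
    """Stand-in for a Merkle root: hash of the canonically serialised state."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()

def sequence_batch(state: dict[str, int], txs: list[dict]) -> tuple[dict, dict[str, int]]:
    """Operator side: execute off chain, then 'post' the batch data and claimed root to L1."""
    for tx in txs:
        state = apply_tx(state, tx)
    return {"txs": txs, "claimed_root": state_root(state)}, state

def batch_is_valid(pre_state: dict[str, int], posted: dict) -> bool:
    """Verifier side: replay the posted data; a mismatch would justify a fraud proof."""
    state = pre_state
    for tx in posted["txs"]:
        state = apply_tx(state, tx)
    return state_root(state) == posted["claimed_root"]

genesis = {"alice": 100}
batch = [{"from": "alice", "to": "bob", "amount": 30},
         {"from": "bob", "to": "carol", "amount": 10}]
posted, new_state = sequence_batch(genesis, batch)
print(new_state)                        # {'alice': 70, 'bob': 20, 'carol': 10}
print(batch_is_valid(genesis, posted))  # True

# A dishonest operator claiming a different root is caught by anyone who replays the data
forged = {**posted, "claimed_root": state_root({"alice": 0, "mallory": 100})}
print(batch_is_valid(genesis, forged))  # False
```

This is also why data availability matters: the check only works if the batch data was actually published.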

If you want to dive deeper into Layer 2 scaling solutions, I strongly recommend watching the video below.

v2.5: Modularised Programmable Settlement

(UNBUNDLING Monolithic Blockchains to Enhance Scalability)

Ethereum’s scalability challenges were addressed by new blockchains running the same VM, by new chains running a completely different VM, and by moving transactions off Ethereum L1 onto L2s.

The result is systemic fragmentation, economic inefficiency, new centralisation vectors and, most annoyingly, tribalism.

I call this wave v2.5 because it does not add a functional quantum leap the way smart contracts did over Bitcoin Script. The design space for v2.5 is:

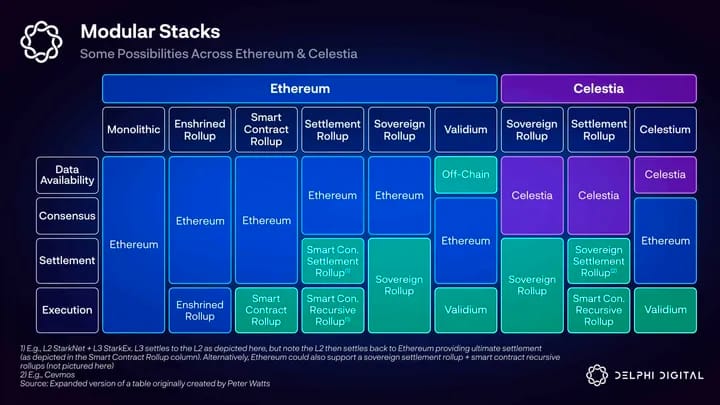

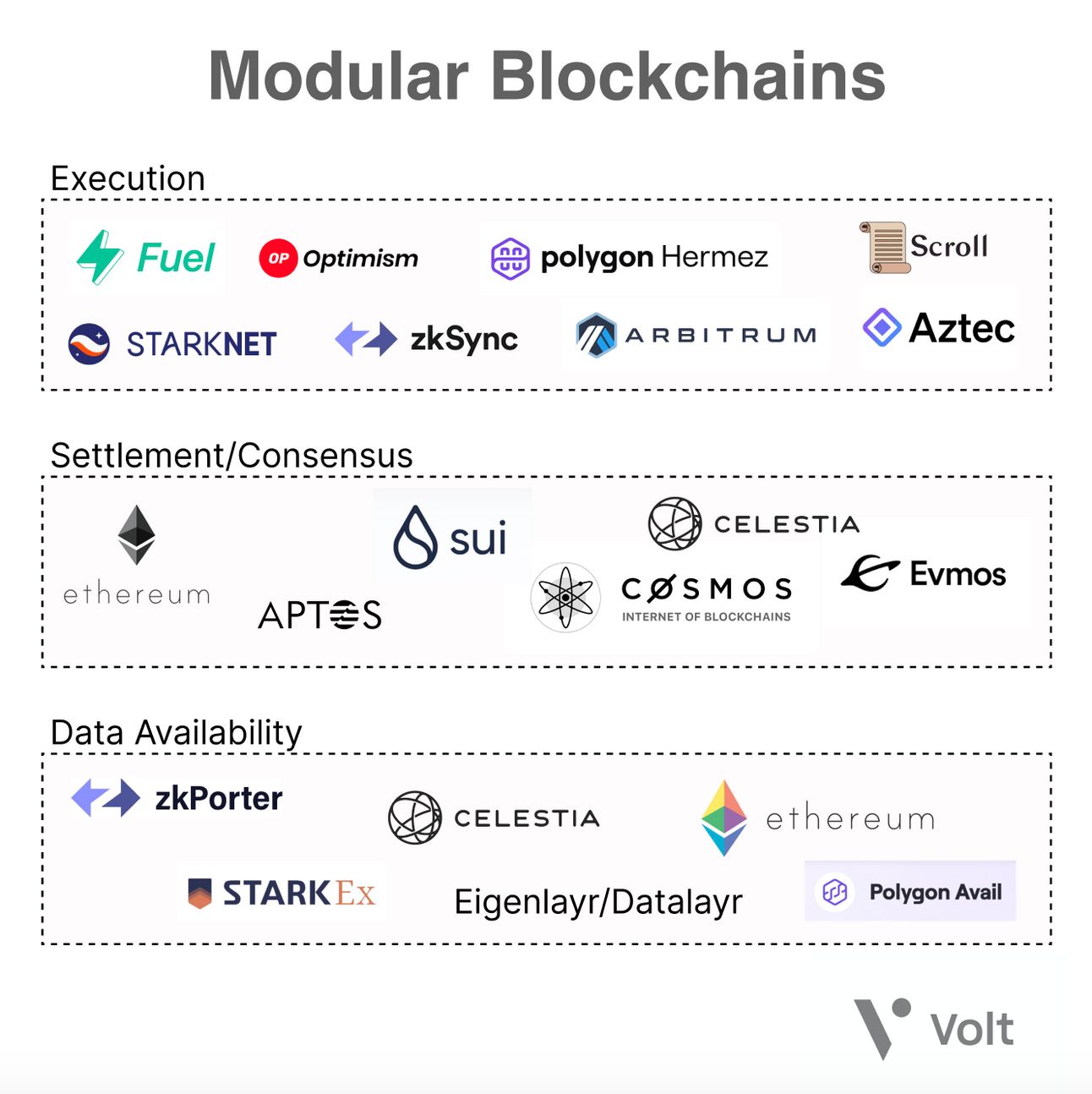

A) Modular Blockchains

I won’t dive too deep into this, but modularity effectively lets developers design better experiences for their users by picking the best option available at each layer. Rollups differ in their design choices across layers including, but not limited to, execution, settlement and data availability. Read more about modular blockchains here. Below is a brief explainer on the layers of a blockchain!

Execution (eg: L2s like Base, Arbitrum)

The execution layer is where transactions are processed and state changes are computed. Users typically interact with the blockchain through this layer by signing transactions, deploying smart contracts, and transferring assets.

Settlement (eg: L1 Ethereum, Solana)

The settlement layer is where the execution of rollups is verified and disputes are resolved.

Consensus (eg: L1 Ethereum, Cosmos, Sui, Aptos etc)

The consensus layer provides ordering and finality through a network of full nodes that download and execute the contents of blocks and reach consensus on the validity of state transitions.

Data Availability (eg: Ethereum, EigenLayer, Celestia)

The data required to verify that a state transition is valid should be published to and stored on this layer, so that it can be checked easily even if malicious block producers try to withhold transaction data.

B) Interoperability Protocols

With the proliferation of chains and rollups, interoperability has become a major focus. Interoperability protocols give siloed blockchains a way to send and receive messages to and from each other. This messaging is the backbone of asset transfer, and unlocks liquidity across different chains.

Bridges also vary in terms of the trust assumptions they make. To understand more about the bridge trilemma, please read this.
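Trust assumptions are easiest to see in code. Below is a toy destination side of a lock-and-mint bridge (all names hypothetical): the source chain locks tokens and a committee of validators attests to that message; once k of n have attested, wrapped tokens are minted, exactly once. The bridge’s whole security reduces to “k of these n parties are honest”. Light-client or ZK-proof bridges replace the committee check with verification of the source chain itself.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LockMessage:
    """Claim relayed from the source chain: `amount` was locked there for `recipient`."""
    nonce: int
    recipient: str
    amount: int

class ToyBridgeDestination:
    """Destination side of a toy lock-and-mint bridge.

    Trust assumption: at least `threshold` of the `validators` are honest.
    Real bridges verify signatures (or light-client / ZK proofs); here a
    validator's name stands in for its signature.
    """

    def __init__(self, validators: set[str], threshold: int):
        self.validators = validators
        self.threshold = threshold
        self.attestations: dict[LockMessage, set[str]] = {}
        self.processed_nonces: set[int] = set()
        self.wrapped_balances: dict[str, int] = {}

    def attest(self, validator: str, msg: LockMessage) -> bool:
        """Record an attestation; mint when the threshold is first reached. Returns True if minted."""
        if validator not in self.validators:
            raise PermissionError(f"{validator} is not a bridge validator")
        signers = self.attestations.setdefault(msg, set())
        signers.add(validator)
        if len(signers) >= self.threshold and msg.nonce not in self.processed_nonces:
            self.processed_nonces.add(msg.nonce)  # replay protection: each lock mints once
            self.wrapped_balances[msg.recipient] = (
                self.wrapped_balances.get(msg.recipient, 0) + msg.amount)
            return True
        return False

bridge = ToyBridgeDestination(validators={"v1", "v2", "v3"}, threshold=2)
msg = LockMessage(nonce=1, recipient="bob", amount=50)
print(bridge.attest("v1", msg), bridge.attest("v2", msg), bridge.attest("v3", msg))  # False True False
print(bridge.wrapped_balances)  # {'bob': 50}
```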

LayerZero is one of many players in an increasingly hot interoperability space.

If you are exhausted by the sheer number of protocols, rollups, bridges and oracles, and feel like we have more protocols than users, you are not alone. I do, however, disagree that we have more infrastructure than we need: infrastructure still has tremendous room to improve from where it is today.

What are the limitations of v2.5?

Challenging UX:

There are hundreds of “pathways” with seemingly no directions, and a dark forest of dangers (MEV, exploits, gas fees). Users today have to know how to get where they want to go.

The complexity added by every new rollup has yet to be abstracted away for end users at scale.

While rollups have proven to be composable at the settlement layer, they still feel foreign to an uninitiated user.

Centralisation Vectors:

To cope with this “multiple pathways” dilemma, most dapps today rely on centralised execution pathways. For example, when you make a swap on a DEX, there is no decentralised counterparty discovery: the app’s own infrastructure decides how your order is routed. Most dapps execute user “intents” in a centralised way.

Furthermore, many rollups still have centralised sequencers, which means they can order transactions as they see fit. This is the largest source of corrosive MEV.

Limited Composability

While modularity has increased the design space of what is possible, composability across domains is still limited by developer complexity.

With each rollup running its own sequencer, it is not possible to guarantee atomic inclusion for cross-chain interactions.

Today, developers are forced to think in terms of single domains, because deploying an application to multiple domains while retaining composability is still complex.

Make it make sense!

To summarise where we are today: we have unbundled every layer of the blockchain stack. This means a transaction can now be executed on one chain, have its data published on another and be settled on a third.

While these changes solve infrastructural bottlenecks, they have created UX problems and new centralisation vectors, and have limited composability. These are the largest issues left to address, and they are what Blockchain 3.0 is tackling!

v3.0: Intent Satisfaction i.e. SUAVE/Anoma

(UNBUNDLING Domain-Specific Mempools and BUNDLING Them into 1 Unified Ledger)

Just to reiterate, each wave has begun where the previous one fell short. BTC was created to decentralise economic value. Ethereum was created to make it easy to build programmable applications on top of decentralised economic value. Newer blockchains and Layer 2s were created to address Ethereum’s scalability.

The third wave is defined by reinforcing decentralisation and redefining UX.

Since many of you have probably been wondering when you’ll get some information on intents, here it is, finally.

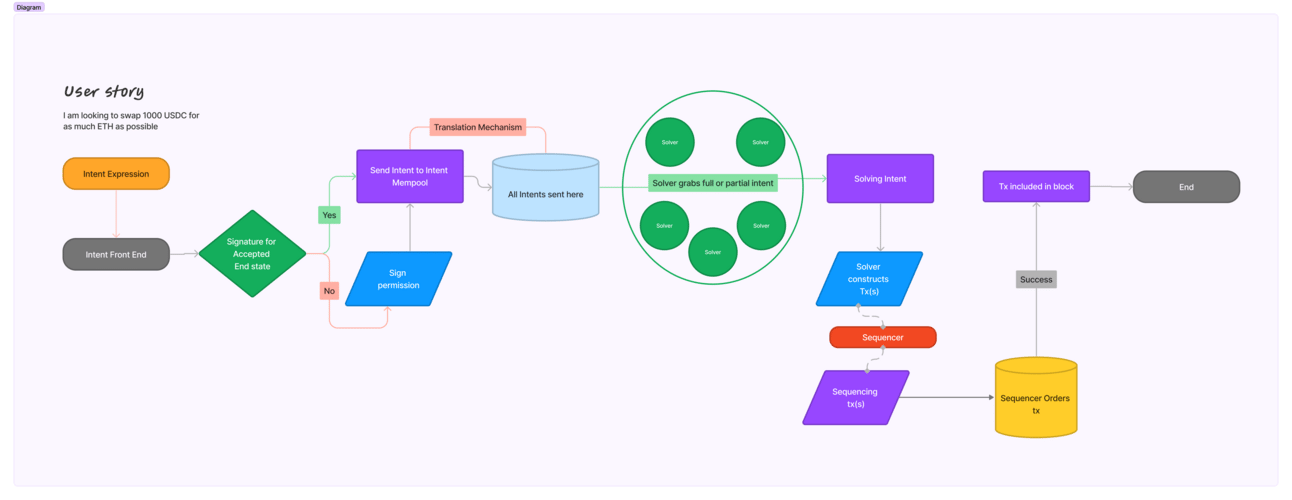

And now FINALLY- WTF ARE INTENTS!!!!

I want to swap USDC for ETH, and I want to get the best possible price. Oh, one more thing: I also want to swap every month on the 30th. If the ETH price falls 15% below today’s price, then stop.

Intents Are an Expression of End State

This is an intent. Or rather, a bunch of intents. For a user to execute this intent today, they must know which protocols can swap USDC for ETH, compare their prices, and repeat this every month on the 30th. Effectively, the user is forced to initiate transactions and sign messages every month, finding the best price across DEXs, on chain and off chain, to accomplish this. Automating this with an EOA wallet would require your private key to be stored somewhere, which creates an unfavourable security paradigm.

Automation/Programmability

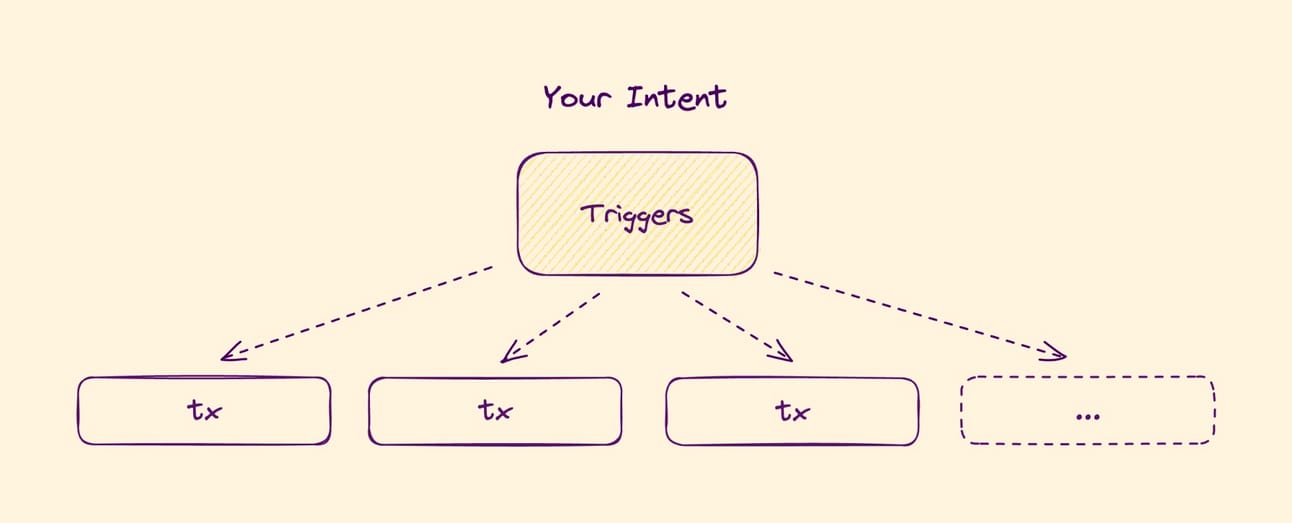

In blockchains today, the unit of activity on chain is a transaction. A transaction is imperative (it spells out exactly how to act), while an intent is declarative (it states the outcome you want and the constraints it must meet). In the example above, we can swap USDC for ETH on Uniswap, and this would be a single transaction. However, our end state is not a single transaction. In fact, the number and shape of the transactions needed to reach it are not defined up front!
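The imperative/declarative split can be sketched as two data structures. Both classes and all field names below are hypothetical, not any protocol’s intent format: the transaction pins down a venue and amounts, while the intent only encodes the user’s conditions from the USDC/ETH example (the 30th of each month, stop after a 15% drop). “Best possible price” is left to competing solvers and is not modelled here.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class SwapTransaction:
    """Imperative: spells out HOW (one venue, one amount, one moment)."""
    venue: str            # e.g. a specific pool address (hypothetical field)
    sell_token: str
    buy_token: str
    sell_amount: int
    min_buy_amount: int

@dataclass(frozen=True)
class RecurringSwapIntent:
    """Declarative: states WHAT outcome is acceptable; solvers decide how to get there."""
    sell_token: str
    buy_token: str
    sell_amount: int        # whole USDC units, for readability
    day_of_month: int       # swap on this day each month
    reference_price: float  # ETH price in USDC when the intent was signed
    stop_drawdown: float    # stop once the price is this fraction below the reference

    def is_due(self, today: date) -> bool:
        # Note: months without a 30th (e.g. February) never match; a real spec needs a rule for that
        return today.day == self.day_of_month

    def is_active(self, current_price: float) -> bool:
        return current_price >= self.reference_price * (1 - self.stop_drawdown)

intent = RecurringSwapIntent("USDC", "ETH", sell_amount=1_000,
                             day_of_month=30, reference_price=2_000.0, stop_drawdown=0.15)
print(intent.is_due(date(2023, 10, 30)), intent.is_active(1_800.0))  # True True
print(intent.is_active(1_650.0))  # False: more than 15% below the reference, so the intent stops
```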

A New Mempool, with Decentralised Counterparty Discovery

Intents allow users to specify end states without knowing how to get there. In intent-centric architectures, these expressed end states are broadcast to a new mempool, where “solvers” compete to satisfy your intent fully or partially.

Intents allow us to express destinations or outcomes, not transactions! This means an end user only has to create an intent and sign it. Once it is submitted to the intents mempool, counterparties compete or collaborate to give you the best possible price for executing it. This outsources the hassle of constructing pathways to specialised actors, who compete to provide you the best execution.

In an intent-centric world, a solver will determine whether it makes sense to use CoW Swap or UniswapX to fulfil your order. The intents mempool provides a layer to construct and coordinate actions, markets and programmability on a unified ledger.
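Here is a minimal Python sketch of that competition under one assumed rule: solvers submit candidate fills, anything worse than the user’s signed limit is discarded, and the fill that gives the user the most wins. Names, units and the tie-break are hypothetical; real systems such as CoW Protocol’s batch auctions or UniswapX’s Dutch-auction orders use their own, more involved rules.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Intent:
    sell_token: str
    buy_token: str
    sell_amount: int     # in the sell token's smallest unit
    min_buy_amount: int  # the worst outcome the user will accept (their signed limit)

@dataclass(frozen=True)
class Solution:
    solver: str
    route: str       # free-text description of the pathway (illustrative)
    buy_amount: int  # what the user would receive

def select_winner(intent: Intent, solutions: list[Solution]) -> Optional[Solution]:
    """Hypothetical auction rule: drop solutions that violate the user's limit,
    then pick the one that gives the user the most (ties broken by solver name)."""
    valid = [s for s in solutions if s.buy_amount >= intent.min_buy_amount]
    return max(valid, key=lambda s: (s.buy_amount, s.solver), default=None)

# 2,000 USDC (6 decimals) for at least 0.99 ETH (18 decimals)
intent = Intent("USDC", "ETH", sell_amount=2_000_000_000, min_buy_amount=990_000_000_000_000_000)
bids = [
    Solution("solver_a", "route via an AMM", 995_000_000_000_000_000),
    Solution("solver_b", "CoW match + AMM", 1_001_000_000_000_000_000),
    Solution("solver_c", "private inventory", 980_000_000_000_000_000),  # below the limit
]
print(select_winner(intent, bids).solver)  # solver_b
```

The user never picks a route; the limit they signed is the only thing a solver must respect, and competition does the rest.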

How an intent flows

Layers of Intent Centric Architectures

Decentralisation is complicated. The figure above shows the journey of an intent from expression to settlement! Intent-centric architectures involve multiple layers, and therefore multiple opportunities across those layers. Let us look at them at a high level. (PS: I will likely write about each individual layer and the players within it.)

Intent Expression: This is the first step, where a user defines their desired end state. Front ends and interfaces!

Intent Translation: The user’s intent is translated into a domain-specific language (or another format) that the VM can understand.

Intent Solution: Solvers pick up the intent from the intent mempool and construct solutions, i.e. full or partial transactions, to fulfil it.

Intent Execution: The solutions are executed on the respective domains, as transactions on the destination chain(s).

Intent Settlement: Users confirm that they have reached the end state they intended.
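The five layers form a pipeline that every intent passes through in order. A minimal Python sketch of that ordering as a state machine: the stage names simply mirror the list above, and it does not model real architectures that merge or split layers, or partial fills that need several solve/execute rounds.

```python
from enum import Enum

class Stage(Enum):
    EXPRESSED = "expressed"    # user signs a desired end state via a front end
    TRANSLATED = "translated"  # intent encoded in a form the VM understands
    SOLVED = "solved"          # solver(s) produced full or partial transactions
    EXECUTED = "executed"      # transactions ran on the destination chain(s)
    SETTLED = "settled"        # user's intended end state is confirmed

# Each layer hands off to exactly one next layer
NEXT_STAGE = {
    Stage.EXPRESSED: Stage.TRANSLATED,
    Stage.TRANSLATED: Stage.SOLVED,
    Stage.SOLVED: Stage.EXECUTED,
    Stage.EXECUTED: Stage.SETTLED,
}

class IntentLifecycle:
    def __init__(self) -> None:
        self.stage = Stage.EXPRESSED
        self.history = [Stage.EXPRESSED]

    def advance(self, to: Stage) -> None:
        """Move to the next layer; skipping or reordering layers is rejected."""
        if NEXT_STAGE.get(self.stage) is not to:
            raise ValueError(f"cannot go from {self.stage.value} to {to.value}")
        self.stage = to
        self.history.append(to)

flow = IntentLifecycle()
for stage in (Stage.TRANSLATED, Stage.SOLVED, Stage.EXECUTED, Stage.SETTLED):
    flow.advance(stage)
print([s.value for s in flow.history])
# ['expressed', 'translated', 'solved', 'executed', 'settled']
```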

Enabling Tech

I think it is fair to say that crypto UX has been a major barrier to mass adoption. Public/private key management and fragmented liquidity, with a different toolkit for each domain, have made it a nightmare. While intents open a whole new design space, there are already entry points in place that make the transition to intent-centricity much smoother.

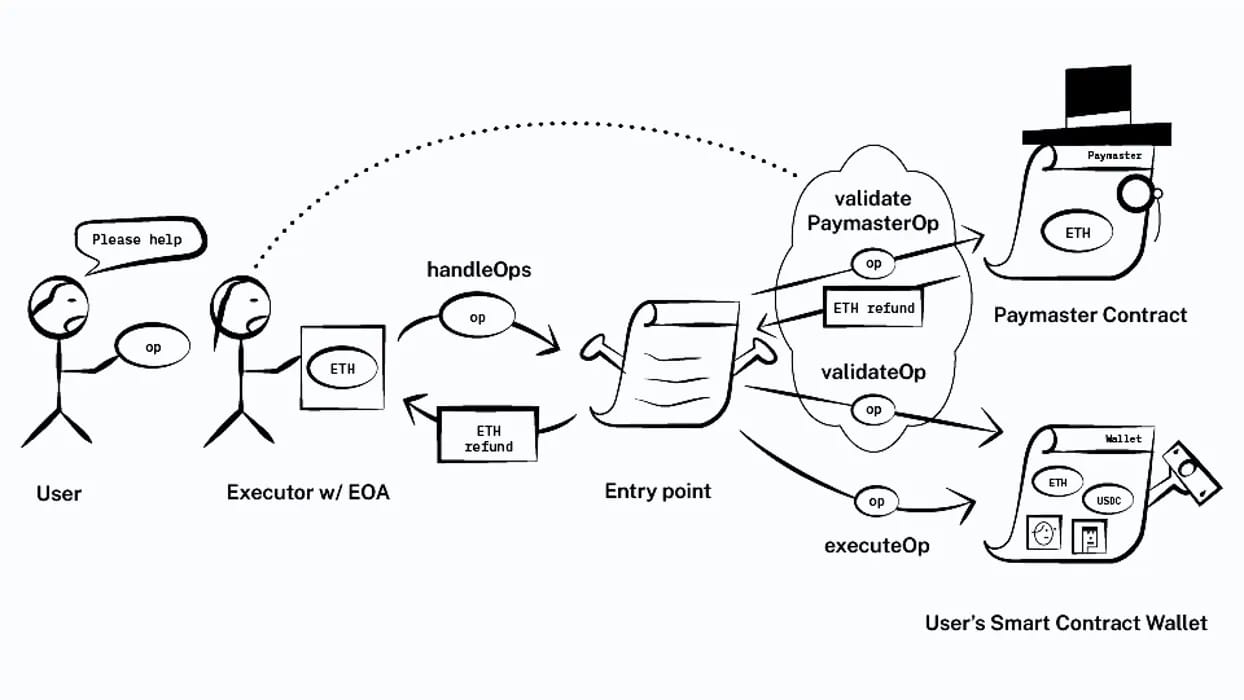

Account Abstraction, which Uma Roy from Succinct describes as “opinionated intents”.

Account Abstraction (ERC-4337)

There are way too many resources that explain account abstraction better than I can, but what is worth noting here is that with ERC-4337, you no longer need your own Externally Owned Account (EOA) to initiate actions on chain. A smart contract wallet (SCW) can do so instead, with third-party bundlers submitting its operations on chain.

Account Abstraction is a spec for a Generalized Smart Contract Wallet.

Those of us who have swapped on DEXs using MetaMask (MM) know the pain of continuously signing messages and transactions.

s/o Bastian Wetzel

So effectively, smart contract wallets allow us to wrap complex execution logic around user transactions in areas such as:

Social recovery

Conditional allowances for different applications

Paying gas fees in different tokens

This is why Uma describes them as opinionated!
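To make “wrapping logic around transactions” concrete, here is a hypothetical Python model of two of those policies: per-application spending allowances and guardian-based social recovery. It is not ERC-4337’s interface (in ERC-4337 this kind of check lives in the wallet contract’s validateUserOp step for each UserOperation); class, method and account names are illustrative, and paying gas in other tokens (handled by paymasters in ERC-4337) is not modelled.

```python
class ToySmartWallet:
    """Hypothetical smart-contract-wallet policy, modelled off chain in Python."""

    def __init__(self, owner: str, guardians: set[str], recovery_threshold: int):
        self.owner = owner
        self.guardians = guardians
        self.recovery_threshold = recovery_threshold
        self.allowances: dict[str, int] = {}           # app -> remaining spend limit
        self.recovery_votes: dict[str, set[str]] = {}  # proposed owner -> guardians in favour

    def set_allowance(self, caller: str, app: str, amount: int) -> None:
        if caller != self.owner:
            raise PermissionError("only the owner can set allowances")
        self.allowances[app] = amount

    def spend(self, app: str, amount: int) -> None:
        """An app may spend within the limit the owner granted it, with no new signature."""
        remaining = self.allowances.get(app, 0)
        if amount > remaining:
            raise PermissionError(f"{app} exceeds its allowance")
        self.allowances[app] = remaining - amount

    def vote_recovery(self, guardian: str, new_owner: str) -> None:
        """Social recovery: enough guardians can rotate a lost key to a new owner."""
        if guardian not in self.guardians:
            raise PermissionError("not a guardian")
        votes = self.recovery_votes.setdefault(new_owner, set())
        votes.add(guardian)
        if len(votes) >= self.recovery_threshold:
            self.owner = new_owner
            self.recovery_votes.clear()

wallet = ToySmartWallet("alice_key", guardians={"g1", "g2", "g3"}, recovery_threshold=2)
wallet.set_allowance("alice_key", "dex", 100)
wallet.spend("dex", 60)  # fine: 40 left
wallet.vote_recovery("g1", "alice_new_key")
wallet.vote_recovery("g2", "alice_new_key")
print(wallet.owner, wallet.allowances)  # alice_new_key {'dex': 40}
```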

Aggregation

In a sea of multiple DEXs and protocols across different domains, transaction execution has historically been limited to a single dapp.

Aggregation protocols have stepped in to solve this liquidity fragmentation and attempt to provide superior UX for transaction execution.

UniswapX is a prominent example. It aggregates liquidity from Uniswap’s own pools as well as other on-chain and off-chain sources to give users the best possible price!

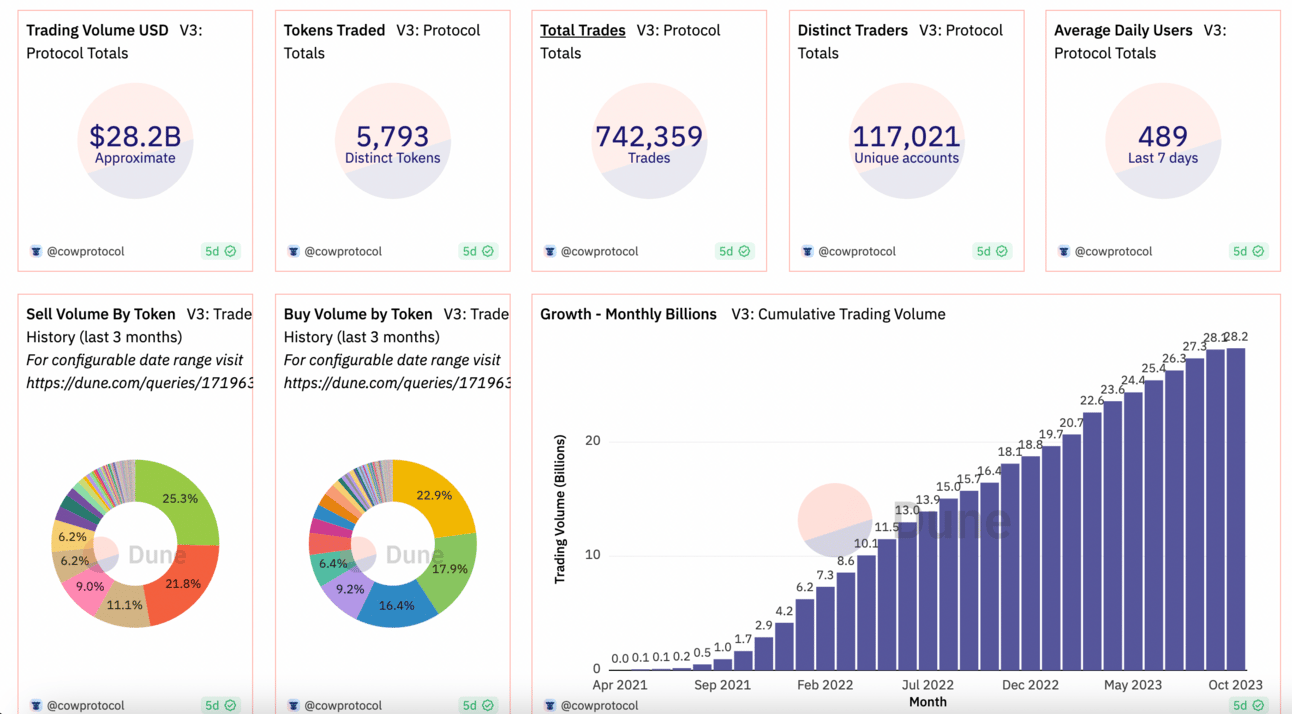

CoW Swap does this too, and goes further by matching users’ opposing “wants” directly with each other (so-called coincidences of wants).

Aggregators solve for the multiple pathways problem when it comes to providing the users better UX for execution.
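A stripped-down Python sketch of what an aggregator does: quote the same trade against several venues and pick the best route, or split the order when that yields more. It assumes every venue is a constant-product (x * y = k) pool with a 0.30% fee, the formula used by Uniswap v2-style pools; pool names and reserves are hypothetical, and real aggregators also weigh gas costs, other pool designs and off-chain quotes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pool:
    name: str
    reserve_in: int    # pool's reserve of the token being sold
    reserve_out: int   # pool's reserve of the token being bought
    fee_bps: int = 30  # 0.30%

    def quote(self, amount_in: int) -> int:
        """Constant-product output after fees, rounded down."""
        amount_in_with_fee = amount_in * (10_000 - self.fee_bps)
        return (amount_in_with_fee * self.reserve_out) // (
            self.reserve_in * 10_000 + amount_in_with_fee)

def best_single_route(pools: list[Pool], amount_in: int) -> tuple[str, int]:
    best = max(pools, key=lambda p: p.quote(amount_in))
    return best.name, best.quote(amount_in)

def best_split(a: Pool, b: Pool, amount_in: int, steps: int = 100) -> tuple[int, int]:
    """Brute-force the share sent to pool `a` in 1/steps increments; return (amount_to_a, total_out)."""
    best_to_a, best_out = 0, -1
    for k in range(steps + 1):
        to_a = amount_in * k // steps
        out = a.quote(to_a) + b.quote(amount_in - to_a)
        if out > best_out:
            best_to_a, best_out = to_a, out
    return best_to_a, best_out

pool_a = Pool("pool_a", reserve_in=1_000_000, reserve_out=1_000_000)
pool_b = Pool("pool_b", reserve_in=1_000_000, reserve_out=1_000_000)
print(best_single_route([pool_a, pool_b], 100_000))  # ('pool_a', 90661)
print(best_split(pool_a, pool_b, 100_000))           # splitting roughly in half returns more
```

Splitting helps because each pool’s price worsens as more of the order hits it, so spreading the trade reduces slippage.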

These aggregation protocols represent a critical component of intent centric architectures!

Aggregation protocols find us the best pathways for executing our desired end state. Combined with smart contract wallets, they give us much better UX than we have been used to so far.

This also tells us that users are increasingly concerned with end-states. The usage of aggregation protocols is clearly indicative of this!

CoW Swap Stats!

Decentralised Sequencers

Sequencers are responsible for ordering transactions that are then included on chain. Centralised sequencers are responsible for most of the corrosive MEV. Today, Optimism and Base, among other rollups, run centralised sequencers. This is troubling because the sequencer on an L2 can unilaterally include, exclude or reorder transactions for its own gain.

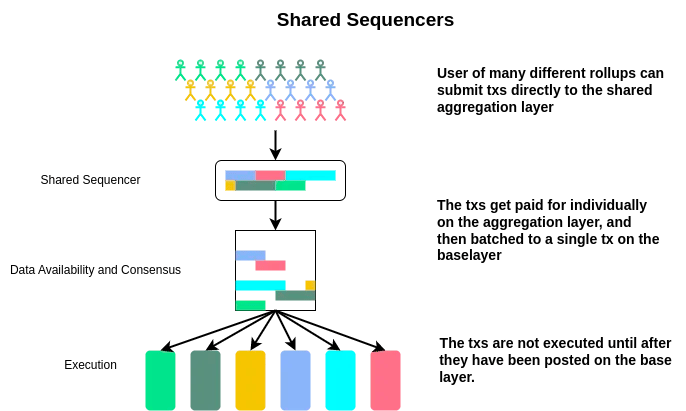

Fortunately, shared sequencers have already started to gain a lot of steam. A shared sequencer is effectively a network common to many rollups, whose job is to order transactions and pass them to each rollup for block production.

Given that the intents mempool sits on top of all L1s and L2s, shared sequencers strengthen atomicity guarantees for cross-domain intents: transactions across all domains that share the same sequencer can be included atomically.

Imagine Optimism mainnet and Arbitrum shared the same sequencer. That would give us much stronger guarantees for intent pathways that involve both chains.
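A toy Python model of that guarantee, with hypothetical names (the rollup labels are just strings): a shared sequencer serving several rollups accepts a cross-rollup bundle only if it can place every leg, so either both legs land or neither does. One caveat worth keeping in mind: this is atomic inclusion. Whether each leg then executes successfully on its rollup is a separate question that shared sequencing alone does not answer.

```python
class ToySharedSequencer:
    """Orders transactions for several rollups; hypothetical model, not any specific project's design."""

    def __init__(self, rollups: list[str]):
        self.next_blocks: dict[str, list[str]] = {r: [] for r in rollups}

    def include_bundle(self, bundle: dict[str, list[str]]) -> bool:
        """All-or-nothing inclusion of a cross-rollup bundle.

        Every leg must target a rollup this sequencer serves; otherwise nothing is
        included, so a user is never left with one leg landed and the other missing.
        """
        if not bundle or any(r not in self.next_blocks or not txs for r, txs in bundle.items()):
            return False
        for rollup, txs in bundle.items():
            self.next_blocks[rollup].extend(txs)
        return True

seq = ToySharedSequencer(["optimism", "arbitrum"])
ok = seq.include_bundle({"optimism": ["sell 1 ETH for USDC"], "arbitrum": ["buy 1 ETH with USDC"]})
rejected = seq.include_bundle({"optimism": ["leg 1"], "zksync": ["leg 2"]})  # zksync not served
print(ok, rejected)  # True False
print(seq.next_blocks)
# {'optimism': ['sell 1 ETH for USDC'], 'arbitrum': ['buy 1 ETH with USDC']}
```

With two independent sequencers, the best a user could do is submit each leg separately and hope both land.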

Espresso Systems, Astria, SUAVE and others are competing to play the shared sequencer role across domains in the L2 ecosystem. I will be doing a deeper dive into the role of sequencers in MEV next week, so stay tuned!

With these three enabling technologies already in production or on their way, intents can be expressed through SCWs, fulfilled by routing through the best available aggregators, and given better atomicity guarantees across chains thanks to decentralised sequencers!

Before I leave you

Why do Intents Matter?

1. UX

Allowing end users to express end states, without knowledge of pathways, is a major step to mass adoption.

Furthermore, being able to do so while making no additional trust assumptions, thanks to smart contract wallets, is another major win.

I like to use the analogy of Google Maps. Google Maps gets us where we want to go without our needing to know anything more than left from right.

At the end of the day, Google is a centralised organisation that will use Maps to steer us in whatever direction earns it ad revenue.

Now imagine a decentralised google maps, where you put a destination, and there are parties that are competing to chart out the best route for you, on the basis of your goals.

This would be a rudimentary way to understand intent centric architectures!

2. Decentralisation

Decentralising Counterparty Discovery

The combination of account abstraction and aggregation has brought us quite far. However, as I mentioned previously, counterparty discovery is centralised. This means that even though UniswapX aggregates on- and off-chain liquidity to find the best execution pathways for my swaps, it remains the arbiter of those pathways.

At some point, these protocols will need to turn on the profitability tap, and when they do, we cannot be certain that our interests are being served in a credibly neutral way. The process through which we discover the best possible counterparties to reach our end state is centralised. This changes with intents, as solvers compete economically to help us discover the best counterparty (or counterparties) to accomplish our end state.

3. Interoperability

Intent-centricity assumes multi-domain atomicity, as it aims to unbundle the mempool from individual domains and create a single, unified ledger. This is buoyed by the focus on shared sequencers, which give better atomic inclusion guarantees for cross-chain transactions.

This is great for users and apps alike because you are no longer locked into a single domain. Coupled with interoperability protocols, this unlocks greater liquidity too!

In Summary

Intents pave the way for infrastructure to be abstracted away. This allows applications to no longer think too deeply about infrastructure and paves the way for multi-domain applications.

End users get a remarkable UX upgrade, which is exciting as it makes crypto far more accessible than it has been in its 15 years of existence. At the end of the day, the goal is to provide society with better primitives: ones that are censorship resistant, trustless, inflation resistant, geography agnostic and highly composable.

While we’ve gone through our phase of hopium, fluff, masquerading and naysaying, we are finally arriving at a place where applications can make the case to build on chain by default because it’s BETTER, not because of ponzinomics.

Next Steps

Head over to our Notion Site, the largest publicly available resource on Intents

Subscribe to Abstract(ed) to stay in the loop

Follow me on Twitter

If you want me to add material to the Notion site, reach out to me on Twitter.

S/o to Adrian Fink, Christopher Goes, Awa Sun Yin from the Anoma team for all their talks on intent-centric architectures. S/o to Robert Miller, Hasu, and the Flashbots team for all their talks on Intents. S/o to Jon Charbonneau and the dBa team for their research into Intents. S/o to Alec Chen from Volt Capital for the research into modular rollups.

s/o Claude, ChatGPT